AI models are evolving fast, and choosing the right one can make all the difference. Today, we’re diving into LLM prompting techniques and a model comparison (ChatGPT vs Claude) to help you understand each model’s strengths and weaknesses.

Before investing in an AI model, we have to know what works best for our needs. Stay informed and make the right choice!

What to expect?

A blog on LLM prompting techniques to get the best results in various scenarios

A snapshot of model comparisons

LLM Prompting Techniques

Getting the best results from LLMs isn't just about picking the right model; it also depends on how you prompt it. Effective prompting can improve responses, while common mistakes can lead to misleading or low-quality outputs. This blog by AI ML Universe covers essential prompting techniques, common pitfalls, and how to avoid them, making it a must-read for anyone using LLMs. For those who want to go deeper, the Prompt Engineering Guide lists advanced, fine-grained techniques (not essential for daily use) for tailoring your AI interactions to niche use cases.

Claude’s Sonnet vs OpenAI’s ChatGPT

Today, I will compare these two popular LLMs across the aspects listed below, so we can understand their capabilities and choose the best model for our use case.

Complex coding needs (short code with advanced syntax; longer code generation using OOP concepts)

Code understanding (documentation, debugging, and optimising code)

Math reasoning capabilities

Complex Coding Needs

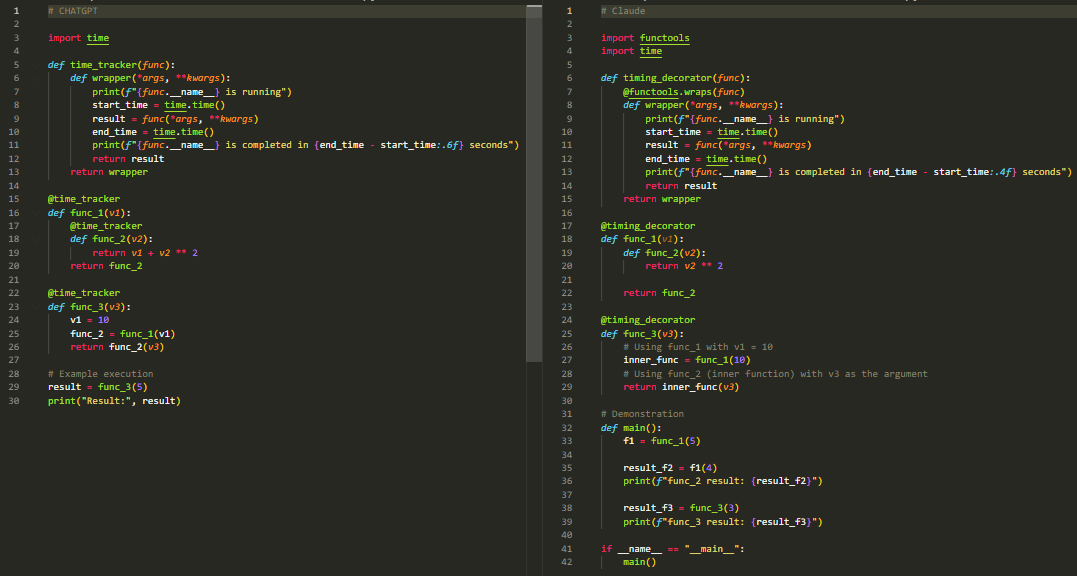

Short code formats with advanced syntax needs

Prompt:

Give me a Python Code, which will have three different functions func_1, func_2, func_3.

func_2:

The func_2 should be an inner function of func_1. Parameters: v2

func_1:

The func_1 should only accept v1 as an argument.

func_3:

func_3 should accept v3 as an argument. func_3 should execute v1+v2^2 using func_1 and func_2, where v3 is equal to v2 and v1 should be 10 in the scope of func_3. No other variables should be explicitly defined in func_3 other than v3.

While running each function, I should get std output as “func_x is running” and “func_x is completed in y seconds” in new lines. The code to calculate start time and end_time should be written only once and should not be repeated for each function.

Expected response: Use Python closures so that func_3 needs no variables other than v3 and the computation happens in func_2. Use a wrapper (decorator) to calculate the execution times and print them.
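For reference, here is a minimal sketch of the kind of answer the expected response describes. It is our own illustration, not output from either model. It assumes that v2^2 means v2 squared (in Python, `^` is bitwise XOR, so `**` is used), that func_1 returns the inner func_2 as a closure, and that func_2 should also be timed. The decorator name `timed` is hypothetical.

```python
import functools
import time


def timed(func):
    """Hypothetical decorator: the start/end timing code is written only once here."""
    @functools.wraps(func)  # keep the wrapped function's __name__ (func_1, func_2, ...)
    def wrapper(*args, **kwargs):
        print(f"{func.__name__} is running")
        start_time = time.perf_counter()
        result = func(*args, **kwargs)
        end_time = time.perf_counter()
        print(f"{func.__name__} is completed in {end_time - start_time:.6f} seconds")
        return result
    return wrapper


@timed
def func_1(v1):
    # func_2 is an inner function; it closes over v1 from func_1's scope.
    @timed
    def func_2(v2):
        # Assumption: "v2^2" means v2 squared, so ** is used instead of ^ (XOR).
        return v1 + v2 ** 2
    return func_2


@timed
def func_3(v3):
    # v1 is passed as the literal 10 and v3 plays the role of v2,
    # so no variable other than v3 is defined in this scope.
    return func_1(10)(v3)


if __name__ == "__main__":
    print(func_3(3))  # 10 + 3**2 = 19
```

Running it prints "func_3 is running", then the running/completed lines for func_1 and func_2, then "func_3 is completed in ... seconds", and finally 19. Because the timing logic lives in one decorator, it is not repeated inside each function, which is the constraint the prompt sets.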

Response:

Comparing both responses, the ChatGPT response is better, even though it failed to comply with one instruction (do not create a variable in func_3). Its logic is on point, and the response is precise. Claude’s response, on the other hand, followed all the instructions but failed to produce the correct logic. So, in my expert opinion, ChatGPT wins: it followed all the instructions relating to the logic of the prompt, and its minor syntactical errors can be easily fixed.

Based on the criteria above, we have ranked the two models across different dimensions. Head to our Medium post to see the detailed comparison.

If you have any queries or suggestions, write to us at [email protected].

Consider sharing this post and recommending it to your friends!